This is a followup to my previous blog post about DataDog billing.

TL;DR:

- I don't recommend DataDog,

- dealing with unhappy customers is hard,

- monitoring for data science nerds?

Hacker News Comments

DataDog at Google Cloud Summit

I was recently at the Seattle Google Cloud Summit and DataDog was well represented, with the biggest booth and top vendor billing during the keynote. Clearly they are doing something right. I had a past unpleasant experience with them, and I had just been auditing my records and discovered that last year DataDog had actually charged me a lot more than I thought, so I was kind of annoyed. Nonetheless, they kept coming up and talking to me, server monitoring is life-and-death important to me, and their actual software is very impressive in some ways.

Nick Parisi started talking with me about DataDog. He sincerely wanted to know about my past experience with monitoring and the DataDog product, which he was clearly very enthusiastic about. So I told him a bit, and he encouraged me to tell him more, explaining that he did not work at DataDog last year, and that he would like to know what happened. He gave me his email, and he was very genuinely concerned and helpful, so I sent him an email with a link to my post, etc. I didn't receive a response, so a week later I asked why, and received a followup email from Jay Robichau, who is DataDog's Director of Sales.

Conference Call with DataDog

Jay set up a conference call with me today at 10am (September 22, 2017). Before the call, I sent him a summary of my blog post, and also requested a refund, especially for the surprise bill they sent me nearly 6 weeks after my post.

During the call, Jay explained that he was "protecting" Nick from me, and that I would mostly talk with Michelle Danis, who is in charge of customer success. My expectation for the call was that we would find some common ground, and that they would at least appreciate the chance to make things right and talk with an unhappy customer. I was also curious about how a successful startup company addresses the concerns of an unhappy customer (me).

I expected the conversation to be difficult but go well, with me writing a post singing the praises of the charming DataDog sales and customer success people. A few weeks ago CoCalc.com (my employer) had a very unhappy customer who got (rightfully) angry over a miscommunication, told us he would no longer use our product, and would definitely not recommend it to anybody else. I wrote to him wanting to at least continue the discussion and help, but he was completely gone. I would do absolutely anything I could to ensure he is satisfied, if only he would give me the chance. Also, there was a recent blog post from somebody unhappy with using CoCalc/Sage for graphics, and I reached out to them as best I could to at least clarify things...

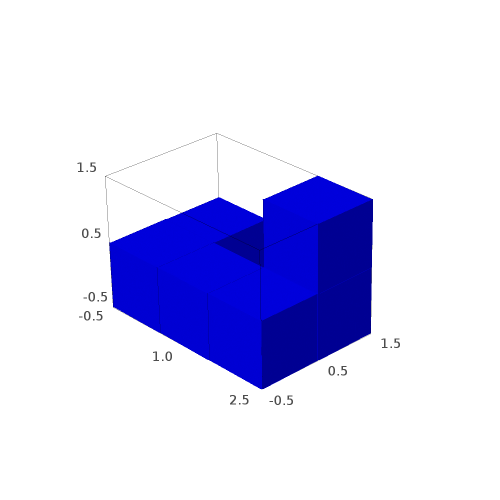

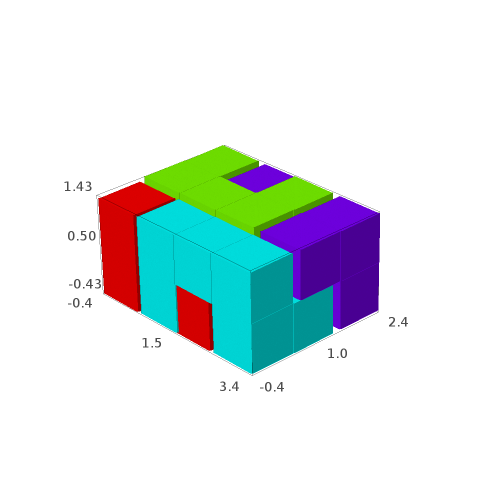

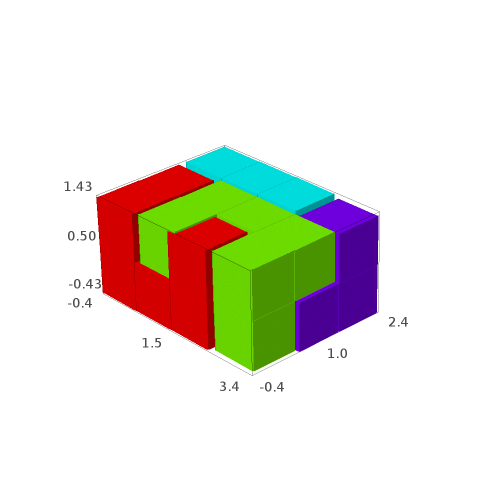

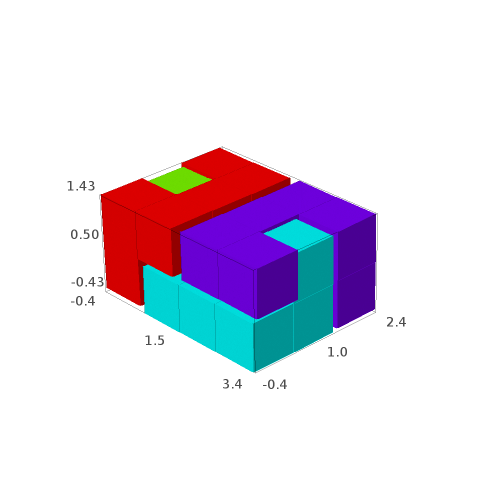

In any case, here's what DataDog charged us as a result of us running their daemon on a few dozen containers in our Kubernetes cluster (a contractor who is not a native English speaker actually set up these monitors for us):

07/22/2016 449215JWJH87S8N4 DATADOG 866-329-4466 NY $639.19

08/29/2016 2449215L2JH87V8WZ DATADOG 866-329-4466 NY $927.22

I was shocked by the 07/22 bill, which spurred my post, and discovered the 08/29 one only later. We canceled our subscription on July 22 (cancelling was difficult in itself).

Michelle started the conference call by explaining that the 08/29 bill was for charges incurred before 07/22, and that their billing system has over a month lag (and it does even today, unlike Google Cloud Platform, say). Then Michelle explained at length many of the changes that DataDog has made to their software to address the issues I (and others?) have pointed out with their pricing description and billing. She was very interested in whether I would be a DataDog customer in the future, and when I said no, she explained that they would not refund my money since the bill was not a mistake.

I asked if they now provide a periodic summary of the upcoming bill, as Google Cloud Platform (say) does. She said that today they don't, though they are working on it. They do now provide a summary of usage so far in an admin page.

Finally, I explained in no uncertain terms that I felt misled by their pricing. I expected that they would understand, and pointed out that they had just described to me many ways in which they were addressing this very problem. Very surprisingly, Michelle's response was that she absolutely would not agree that there was any problem with their pricing description a year ago, and they definitely would not refund my money. She kept bringing up the terms of service. I agreed that I didn't think legally they were in the wrong, given what she had explained, just that -- as they had just pointed out -- their pricing and billing was unclear in various ways. They would not agree at all.

I can't recommend doing business with DataDog. I had very much hoped to write the opposite in this updated post. Unfortunately, their pricing and terms are still confusing today compared to competitors, and they are unforgiving of mistakes. This dog bites.

(Disclaimer: I took notes during the call, but most of the above is from memory, and I probably misheard or misunderstood something. I invite comments from DataDog to set the record straight.)

Also, for what it is worth, I definitely do recommend Google Cloud Platform. They put in the effort to do many things right regarding clear billing.

How do Startups Deal with Unhappy Customers?

I am very curious about how other startups deal with unhappy customers. At CoCalc we have had very few "major incidents" yet... but I want to be as prepared as possible. At the Google Cloud Summit, I went to an amazing "war stories by SREs" session in which they talked about situations they had been in years ago in which their decisions meant the difference between whether they would have a company or job tomorrow or not. These guys clearly have amazing instincts for when a problem is do-or-die serious and when it isn't. And their deep preparation "in depth" was WHY they were on that stage, and a big reason why older companies like Google are still around. Having a strategy for addressing very angry customers is surely just as important.

Google SREs: these guys are serious.

I mentioned my DataDog story to a long-time Google employee there (16 years!) and he said he had recently been involved in a similar situation with Google's Stackdriver monitoring, where the bill to a customer was $85K in a month just for Stackdriver. I asked what Google did, and he said they refunded the money, then worked with the customer to better use their tools.

There is of course no way to please all of the people all of the time. However, I genuinely feel that I was ripped off and misled by DataDog, but I have the impression that Jay and Michelle honestly view me as some jerk trying to rip them off for $1500. And they probably hate me for telling you about my experiences.

So far, with CoCalc we charge customers in advance for any service we provide, so fewer people are surprised by a bill. Sometimes there are problems with recurring subscriptions: when a person is charged for the upcoming month of a subscription and doesn't want to continue (e.g., because their course is over), we always fully refund the charge. What does your company do? Why? I do worry that our billing model means that we miss out on potential revenue.

We all know what successful huge consumer companies like Amazon and Wal-Mart do.

Monitoring for Data Science Nerds?

I wonder if there is interest in a service like DataDog, but targeted at Data Science Nerds, built on CoCalc, which provides hosted collaborative Jupyter notebooks with Pandas, R, etc., pre-installed.

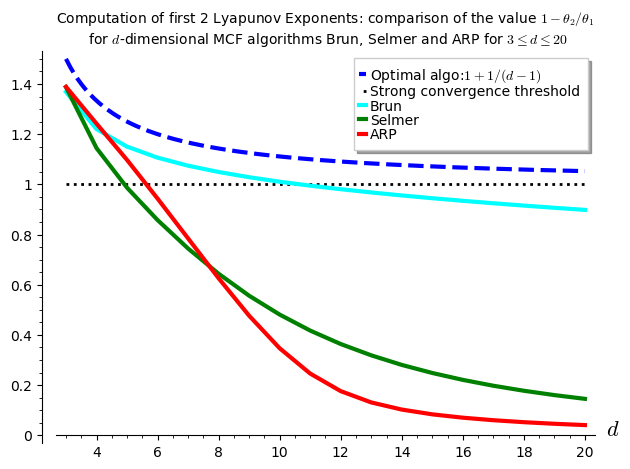

This talk at PrometheusCon 2017 mostly discussed the friction that people face moving data from Prometheus to analyze using data science tools (e.g., R, Python, Jupyter). CoCalc provides a collaborative data science environment, so if we were to smooth over those points of friction, perhaps it could be useful to certain people. And much more efficient...